December 8, 2025

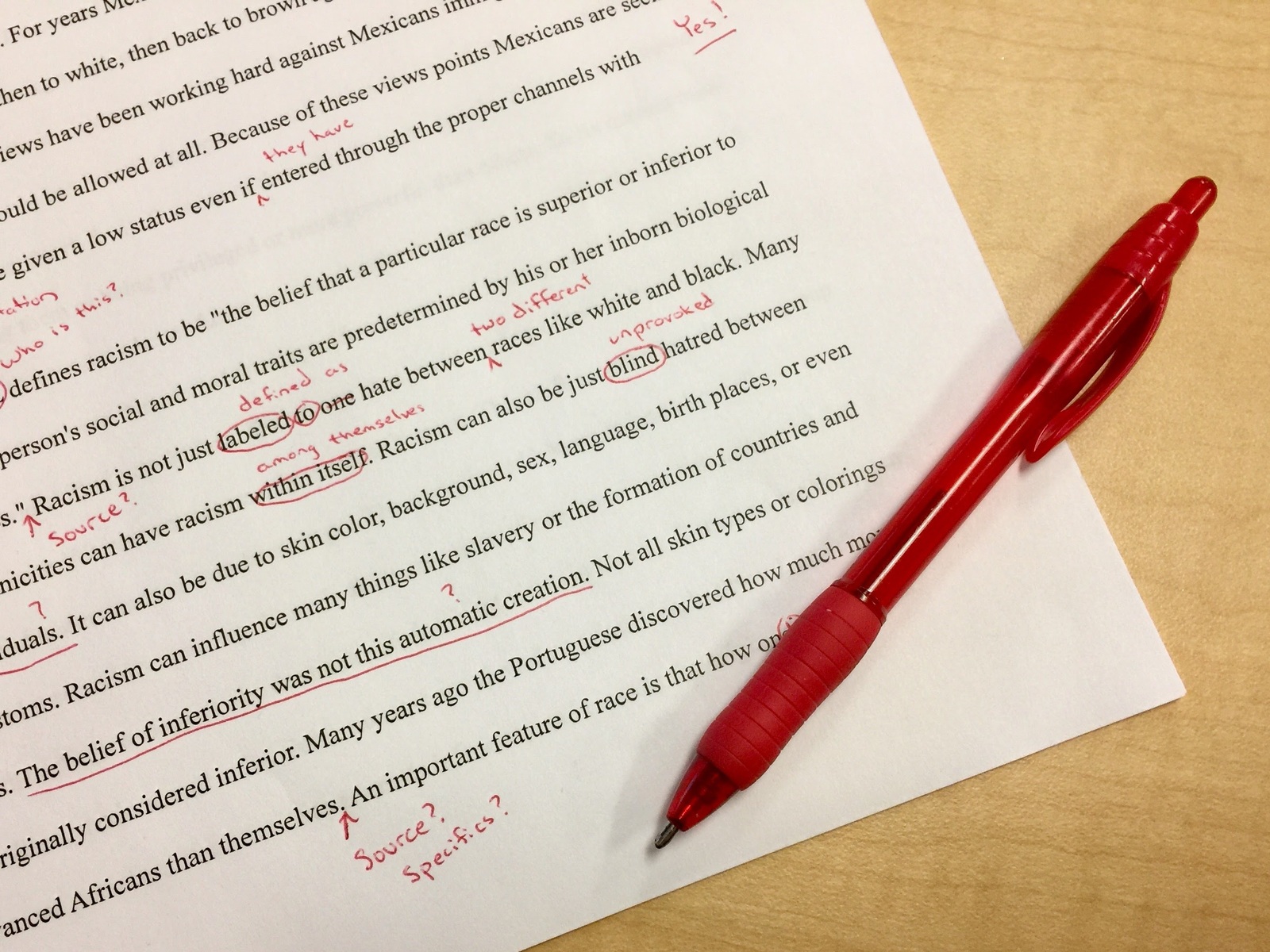

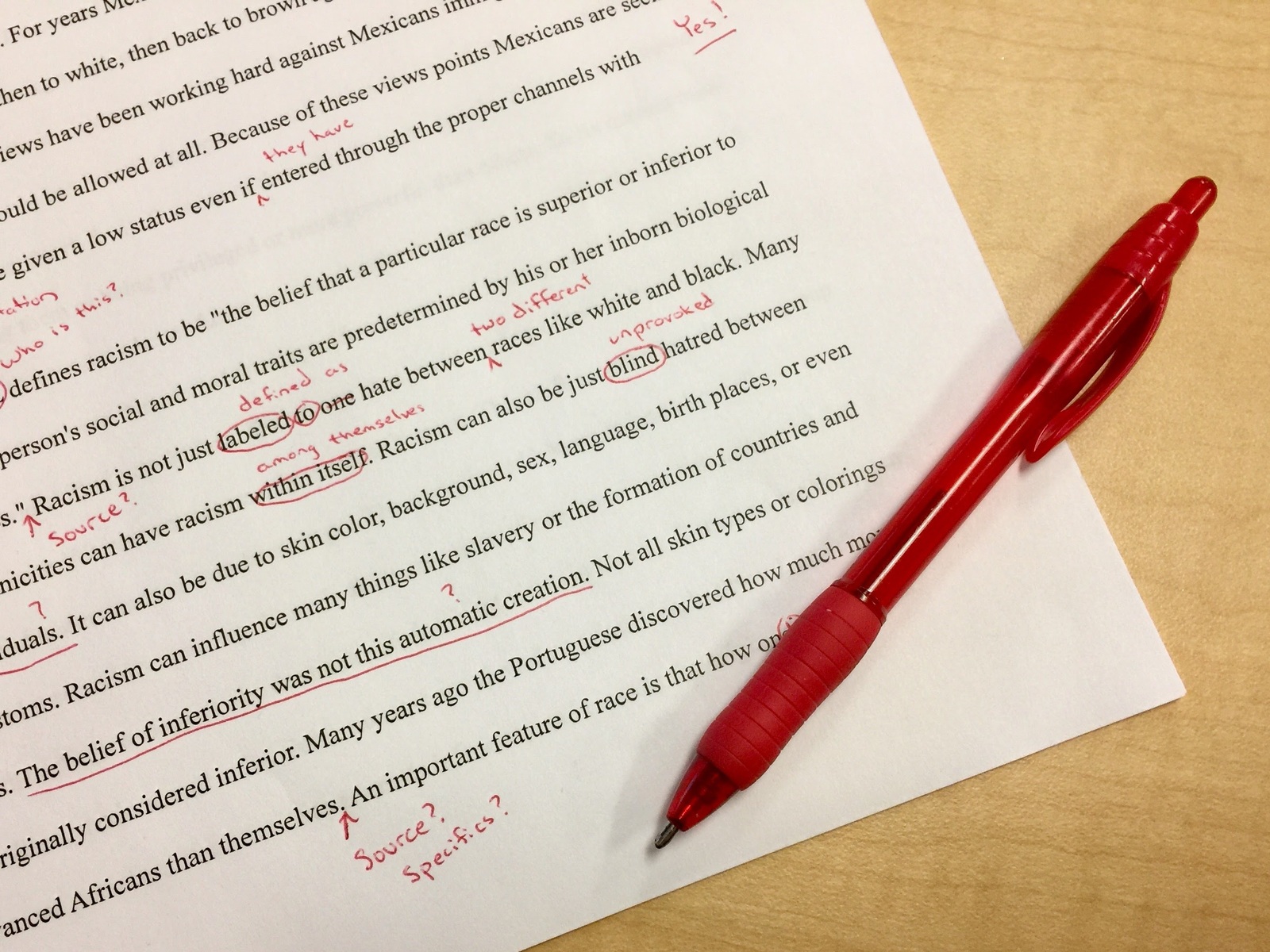

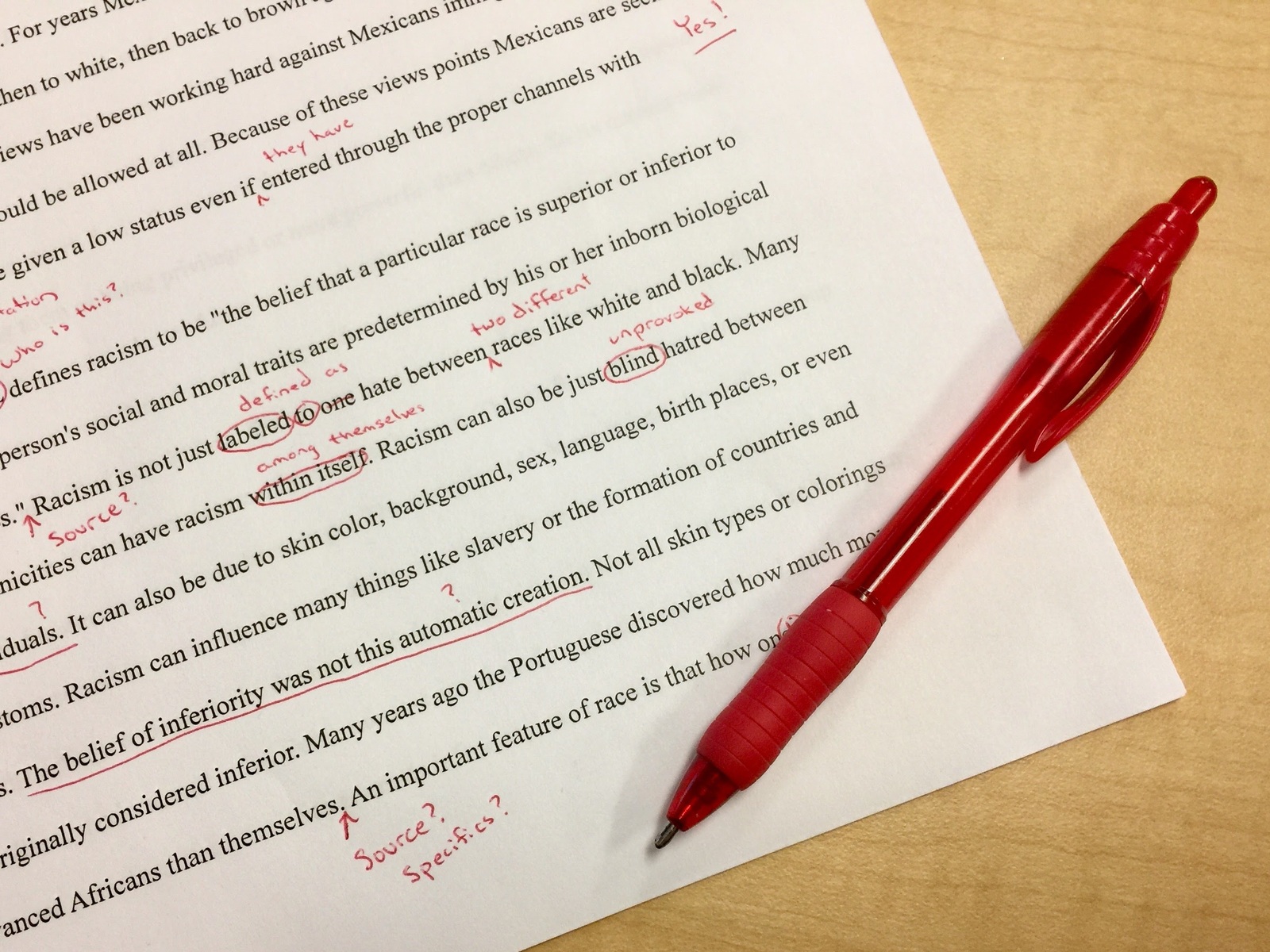

Assessing and grading student writing is a tedious and time-consuming, yet critical, everyday task for teachers. Despite the large time investment, the feedback students receive is often delayed and varies widely in quality.

Artificial Intelligence (AI) and Large Language Models (LLMs) have the potential to enhance teaching and learning, for example by improving the quality and consistency of student assessments and reducing teacher workload. But how good are different types of LLMs at grading essays when compared to human assessment?

In a recent study, a research team led by TUM’s Chair for Human-Centered Technologies for Learning tested how well LLMs can grade real German student essays. Thirty-seven teachers from different school types rated 20 authentic essays. Rather than assigning only a single overall grade, both the teachers and the models rated each text on ten separate criteria related to language (e.g., spelling, punctuation, expression) and content (e.g., plot logic, structure, introduction). The teachers' ratings were then compared with those from several AI systems, including GPT-3.5, GPT-4, the o1 model, and two open-source models (LLaMA 3, Mixtral).

You are a teacher. Analyze the essay written by a 13-year-old child according to the given criteria. Return a scalar number from 1 to 6 for each criteria. 1 means the criteria is not fulfilled at all, 6 means it is completely accomplished. Return only a JSON. ## Criteria = {criteria}; Essay = {text}; ## Rating =

Standardized zero-shot prompt employed across all LLMs to ensure a fair comparison of their essay evaluation performance.
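To make the prompt concrete, here is a minimal Python sketch of how such a template could be filled in and how a model's JSON reply could be checked. The helper names `build_prompt` and `parse_ratings`, the comma-separated formatting of the criteria, and the flat JSON shape keyed by criterion name are assumptions for illustration; the article does not describe the study's actual pipeline, and no model API is called here.

```python
import json

# Prompt text as quoted in the article; {criteria} and {text} are its placeholders.
PROMPT_TEMPLATE = (
    "You are a teacher. Analyze the essay written by a 13-year-old child "
    "according to the given criteria. Return a scalar number from 1 to 6 for "
    "each criteria. 1 means the criteria is not fulfilled at all, 6 means it "
    "is completely accomplished. Return only a JSON. "
    "## Criteria = {criteria}; Essay = {text}; ## Rating ="
)


def build_prompt(criteria, essay):
    """Fill the template (assumption: criteria are joined with commas)."""
    return PROMPT_TEMPLATE.format(criteria=", ".join(criteria), text=essay)


def parse_ratings(reply, criteria):
    """Parse a JSON reply into {criterion: rating}.

    Assumption: the reply is a flat JSON object keyed by criterion name.
    Raises ValueError if a rating is missing, non-numeric, or outside 1..6.
    """
    data = json.loads(reply)
    ratings = {}
    for name in criteria:
        value = data.get(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError(f"missing or non-numeric rating for {name!r}")
        if not 1 <= value <= 6:
            raise ValueError(f"rating for {name!r} is outside 1..6: {value}")
        ratings[name] = value
    return ratings


if __name__ == "__main__":
    criteria = ["spelling", "punctuation", "plot logic"]
    print(build_prompt(criteria, "Once upon a time ..."))
    # A hand-written reply standing in for real model output.
    print(parse_ratings('{"spelling": 4, "punctuation": 5, "plot logic": 3}', criteria))
```

Validating the reply matters because a zero-shot prompt gives no guarantee that a model returns well-formed JSON or stays within the 1 to 6 scale.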

Commercial systems like GPT-4 and especially o1 came closest to teacher judgments and were comparatively reliable. The open-source models, however, produced unstable scores that were sometimes almost identical across different essays, making them currently unsuitable for real grading.

A closer look shows that the models do not “think” like teachers. GPT-3.5 and GPT-4 often gave very similar scores across all criteria once they had formed a general impression of an essay, suggesting a rather one-dimensional judgment. The newer o1 model showed more differentiated scoring across criteria and behaved more like human raters. While teachers paid particular attention to plot logic and the quality of the main part, GPT-4 tended to reward polished language and neat formatting.
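One simple way to picture "one-dimensional" scoring is to look at how much a rater's scores vary across criteria within a single essay. The sketch below uses the population standard deviation as that measure; this is an illustrative choice, not necessarily the analysis used in the study, and the ratings are invented examples, not study data.

```python
from statistics import pstdev


def criterion_spread(ratings):
    """Population standard deviation of one essay's ratings across criteria.

    A value near 0 means the rater gave almost the same score on every
    criterion, i.e. a rather one-dimensional judgment.
    """
    return pstdev(list(ratings.values()))


# Invented illustrative ratings, NOT data from the study.
flat_rater = {"spelling": 4, "punctuation": 4, "plot logic": 4, "structure": 4}
varied_rater = {"spelling": 5, "punctuation": 4, "plot logic": 2, "structure": 3}

if __name__ == "__main__":
    print(criterion_spread(flat_rater))    # identical scores give zero spread
    print(criterion_spread(varied_rater))  # differentiated scores give a larger spread
```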

LLM-based assessment has the potential to be a useful tool for reducing teacher workload, especially by supporting the evaluation of essays based on language-related criteria. The models rated spelling, punctuation and expression in a way that often matched teachers, but struggled with structure, plot logic and the quality of the main part. They also tended to be more generous than teachers overall. AI tools can therefore be useful as assistants for language feedback. Nonetheless, they should not replace teacher judgment, especially when it comes to ideas, arguments and storytelling quality.
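The claim that models are "more generous" can be read as a positive average difference between model and teacher scores on the same essays. The following sketch shows that calculation under this assumption; `mean_bias` is a hypothetical helper and the numbers are invented for illustration, not taken from the study.

```python
from statistics import mean


def mean_bias(model_scores, teacher_scores):
    """Average of (model - teacher) over paired essays.

    A positive result means the model scored higher, i.e. more generously,
    than the teachers on average.
    """
    if len(model_scores) != len(teacher_scores):
        raise ValueError("score lists must have the same length")
    return mean(m - t for m, t in zip(model_scores, teacher_scores))


if __name__ == "__main__":
    # Invented scores for three essays on one criterion, NOT study data.
    model = [5, 4, 6]
    teachers = [4, 4, 5]
    print(mean_bias(model, teachers))  # positive here: the model is more generous
```

Computing this separately per criterion would show where the generosity is concentrated, for instance on content-related criteria versus language-related ones.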

PEER: https://peer-ai-tutor.streamlit.app/

Feature image: https://pxhere.com/en/photo/1032524?utm_content=shareClip&utm_medium=referral&utm_source=pxhere